In their paper published in the journal Science Robotics, Fan Zhang and Yiannis Demiris describe the approach they used to teach the robot to partially dress the mannequin. Júlia Borràs, of the Institut de Robòtica i Informàtica Industrial (CSIC-UPC), has published a Focus piece in the same issue outlining the difficulties of getting robots to handle soft materials and discussing the researchers' new work.

As researchers and engineers continue to advance robotics, one area that has garnered a lot of attention is using robots to assist with health care. In this instance, the focus was on helping hospital patients who have lost the use of their limbs. For such patients, dressing and undressing falls to healthcare workers. Teaching a robot to dress patients has proven challenging because of the soft materials clothes are made of: fabric can deform in a nearly infinite number of ways, which makes it difficult to teach a robot how to handle it. To overcome this problem within a clearly defined setting, Zhang and Demiris developed a new approach.

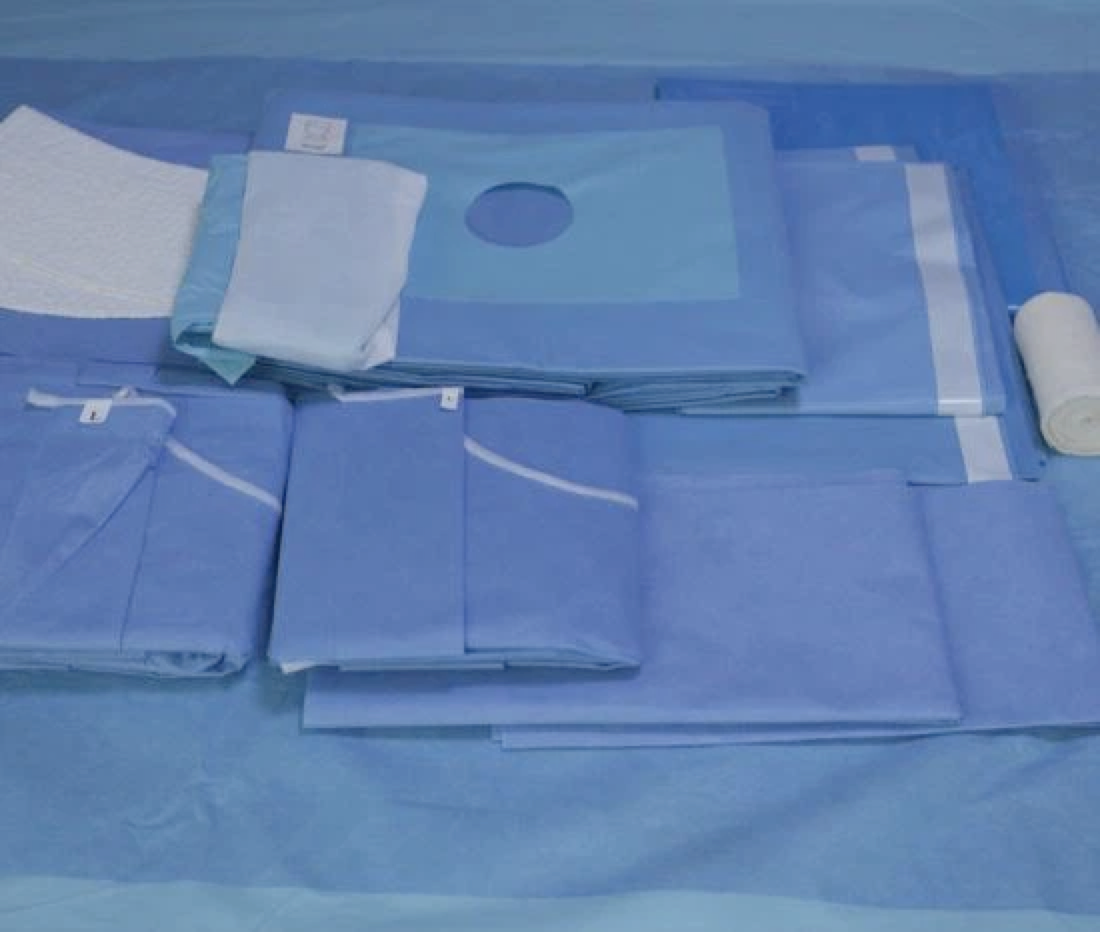

The setting was a simulated hospital room with a mannequin lying face up on a bed. Nearby, a hook on the wall held a surgical gown of the kind that is put on by pushing the arms forward through the sleeves and tying it at the back. The robot's task was to remove the gown from the hook, maneuver it into an optimal position, move to the bedside, identify the "patient" and its orientation, and then put the gown on the patient by lifting each arm in turn and pulling the gown over it in a natural way.

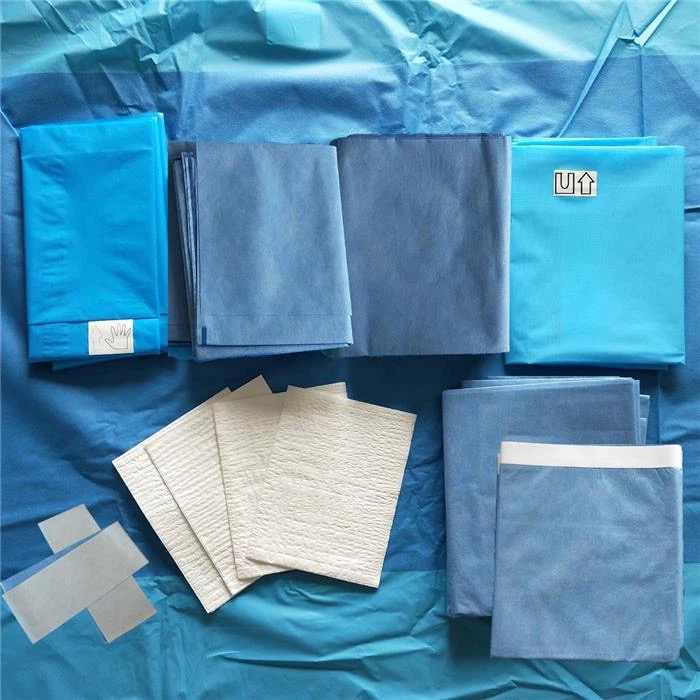

To teach the robot to carry out these tasks, the researchers broke the sequence down into a series of steps. One step involved teaching it to grasp a single layer of the gown rather than the gown as a whole, which could have multiple layers when folded. They also created multiple models that served as teaching elements, showing the robot how to differentiate between colors and textures. They then used these models to teach the robot to distinguish between simulations and videos of the desired activity, training it repeatedly to choose between different options.

In testing their approach, the researchers found that the robot dressed the mannequin properly in approximately 90% of 200 trials.